The Bay Area student technology salon (www.Tech-Meetup.com) invited Yu Kai, deputy director of Baidu's research institute, to give a very substantive talk this Saturday in the auditorium at Intel headquarters in Santa Clara. I call it substantive not only because Yu Kai answered questions such as "Why is Baidu building so many kinds of smart hardware?" and "How does Baidu's autonomous driving differ from Google's?", but because the talk showed Baidu's results in frontier research fields, including deep learning based on big data, and some of its thinking about robots and driverless cars in the big data era of the "Internet of Everything". It also gave those of us who work in the Internet industry a better understanding of how big data and artificial intelligence will evolve.

Although the audience filled the hall, I estimate that only about 200 people were there to hear it. So I have written up Yu Kai's speech for the readers of Lei Feng's Network to read and learn from.

The following is based on the speech:

Thank you to Guo Xiaofeng, Zhu Ping, and the many friends who gave up their weekend to organize this event. Coming back here to speak feels like coming home. Three years ago I returned from the Bay Area to join Baidu, where I became responsible for research on artificial intelligence and deep learning, so I feel very close to everyone here. Looking back, the history is full of interesting turns. I used to be at NEC Lab, where we did a lot of work on deep learning; many of the people doing deep learning today at places like Facebook came from NEC Lab. Going back to China from the Bay Area also meant bringing some Bay Area resources with me. For example, Andrew Ng is a good friend of mine, and I persuaded him to join Baidu.

What does this reflect? Much of the advanced technology R&D that used to happen only in Silicon Valley is now happening in Shenzhen and Beijing as well, so I think this is an era of innovation.

My talk today is titled "From Data to Artificial Intelligence". One distinctive thing about Baidu over the past couple of years is that, as a private company, it has done a great deal of R&D work in this area, which is very encouraging for us.

I think even friends at Google would agree with this view: a search engine is itself an artificial intelligence system. On the one hand it obtains a great deal of data by providing free services; on the other hand it applies many technologies to that data, the most important being data-driven artificial intelligence such as data mining, machine learning, and natural language understanding. In the mobile era and the IoT era, many AI technologies such as speech recognition, speech understanding, and image recognition can also play a very important role.

What is artificial intelligence

What is artificial intelligence? There are many different opinions, such as strong AI versus weak AI, and we have all seen plenty of movies and novels. There is still no single accepted definition, but we can say that an artificial intelligence has several aspects:

The first is perception: collecting data.

The second is understanding: the system has some understanding of its environment and of dialogue.

The third is decision-making: after analyzing all this data and working out what kind of environment it is in, the system makes decisions. Perception, understanding, and decision-making form a three-step cycle.
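The three-step cycle can be pictured as a small loop. The following is only a toy sketch: the `Agent` class, the temperature threshold, and the action names are all hypothetical and chosen purely for illustration, not taken from the talk.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Toy agent; every name and number here is an illustrative assumption."""
    history: list = field(default_factory=list)

    def perceive(self, raw):
        # Perception: collect a raw reading from the environment.
        return float(raw)

    def understand(self, reading):
        # Understanding: interpret the reading as a state of the environment.
        return "hot" if reading > 25.0 else "cool"

    def decide(self, state):
        # Decision: choose an action based on the interpreted state.
        return "fan_on" if state == "hot" else "fan_off"

    def step(self, raw):
        # One pass through the perceive -> understand -> decide cycle.
        reading = self.perceive(raw)
        state = self.understand(reading)
        action = self.decide(state)
        self.history.append((reading, state, action))
        return action


agent = Agent()
actions = [agent.step(raw) for raw in (20, 30, 24)]
print(actions)
```

The `history` list is where accumulated experience would live; a system that learns would use it to adjust `understand` and `decide` over time.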

We see all kinds of so-called intelligent products today, but are they really smart? One essential difference between Internet services and other products is whether the service or product evolves with experience: as it gains more users, it understands users better and better. This ability to evolve with experience, to learn in practice, is the test of whether a product is truly intelligent. Across the mobile Internet, a phone or an app is continuously getting to know its user and learning the user's needs and preferences.

In machine learning research there is a term, "empirical data", meaning experience data: data is experience. We say that today is the age of big data, but what does that mean? It means that a system is able to become more and more intelligent, because the defining feature of intelligence is the ability to learn.

The Internet of Everything and data

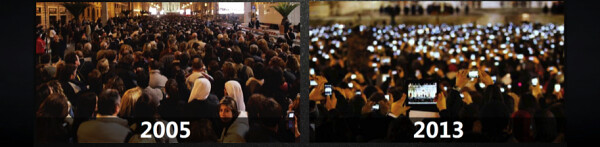

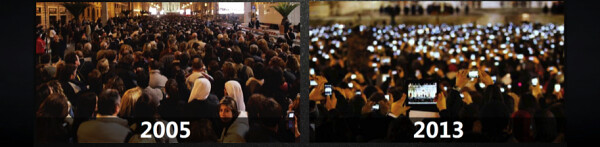

From the PC to the Internet to the mobile Internet, data has changed rapidly. This picture shows the scene at the 2005 papal election; eight years later, in the same place and with a similar crowd, you can see how enormous the change brought by the mobile Internet is. Everyone carries one of these devices, whether to make calls or to take pictures. I do too. You may think you are not using it, but the phone's sensors are sending a lot of data to the cloud. Data is generated all day long.

We are about to enter the era of the Internet of Things, or of robots. What will this era of everything connected look like? Masayoshi Son said at the Wuzhen Internet Conference some time ago that by 2020 one person might be connected to a thousand devices. In China today, each of us may carry two or three cell phones, because a person may play many roles in life (laughter). Add wearables and other connected devices, and an average of three or four devices per person in China is quite normal. In the future even a button might be a device, so the idea of everyone being connected to 1,000 devices is not so sensational.

The result of so many connections is an explosion of data. The data is a product of the Internet of Everything era, but it also gives our services and products an opportunity to learn. From the academic research point of view, artificial intelligence has had its ups and downs over the past fifty or sixty years, but real large-scale application began with the Internet, around 2000. I call the period from 2000 to 2009 "nourishing everything silently": in the Internet era, both search and advertising used a great deal of artificial intelligence, but it was mostly background technology that was not easily noticed.

For our current era, from 2010 to 2019, I use the phrase "thunder out of the silence", and many friends in the Internet industry really can feel the rumble of thunder. With big data, computing power, bandwidth, and deep learning, AI has begun to move from the background to the foreground. Take speech recognition. When I set up Baidu's speech recognition team three years ago, we could not find anyone to hire. I found that very strange: when I was in college, weren't a lot of people doing speech recognition research? I asked around, and they had all changed fields. Before deep learning, speech recognition seemed hopeless and people could not keep doing research on it, but today it has come back and is developing very fast. The same is true of images, natural language understanding, and robotics.

From big data and deep learning to artificial intelligence

Why is artificial intelligence getting so much attention? I think the most important reason is big data, the second is computing power, and the third is deep learning. The top Internet companies have all invested considerably in this field, and Baidu can be said to be one of the companies investing the most in deep learning.

Why does deep learning attract attention? First, deep learning has a romantic side: its mechanisms and behavior bear some relation to the brain. The media emphasize this a lot, but from our point of view it is not the most important reason.

Second, deep learning is particularly well suited to big data. It was originally inspired by biological nervous systems, but its later progress has come mainly from statistics, modeling, data, and computation.

Third, it changes how we think about solving problems. We used to preprocess the data first and then apply machine learning models to it. Deep learning changes this: it is end-to-end learning, in which the raw data goes into the system and every step is accomplished by learning. Speech recognition, for example, is divided into several steps, but are those steps optimized consistently with one another? Not necessarily. Deep learning aims for consistency by optimizing every step toward the final goal.

The fourth point is also a correction. Many people feel that deep learning is a black box: you do not need to understand much, you just use it. In fact deep learning, like machine learning in general, provides a framework, a kind of language system. What is a language? Chinese, for example, is a language system. To write beautiful articles in it you need at least two things: first, a command of the language, and second, insight into life. Deep learning likewise has two conditions: first, you must be able to handle the models and the computation, and second, you must understand the problem well enough.

I'll give you some examples. One of the most successful examples of deep learning is the convolutional neural network, which is closely related to our understanding of the visual system, in particular the cells of the early visual cortex. Today our deep learning models have gone far beyond those structures. Why are they no longer the same? As an analogy, one approach is like studying how birds fly, while deep learning is like Boeing studying how to build an airplane. The two are different in essence: today's planes look nothing like birds and owe more to aerodynamics and mechanics.

From the perspective of statistics and computation, deep learning has a sound basis. In a machine learning system we can decompose the error by source, understand each part, and control it, so that we control the overall prediction error. In machine learning we generally make some assumptions, and we know that no assumption is perfect, so the first source of error is an imperfect model. The second is imperfect data, for example because the data is limited. The third is imperfect computation. Statistics usually deals with the first two, but in reality one of my engineers may say, "Boss, this problem needs 500 machines." I say, "Nonsense, I can give you 50." He says, "With 50 it will take me half a year." And I say, "No, it has to be done this evening." When we tackle a problem with limited computing resources, we have to take this third imperfection into account.
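One common way to write down such a three-part decomposition is sketched below. The notation is an assumption introduced here for illustration and does not come from the talk: $R(\cdot)$ is the expected prediction error, $f^{*}$ the best possible predictor, $f_{\mathcal{F}}$ the best predictor inside the chosen model class $\mathcal{F}$, $f_{n}$ the predictor that exactly minimizes the training error on $n$ samples, and $\tilde{f}_{n}$ the predictor actually returned within the available compute budget.

$$
R(\tilde{f}_{n}) - R(f^{*})
= \underbrace{R(f_{\mathcal{F}}) - R(f^{*})}_{\text{imperfect model}}
+ \underbrace{R(f_{n}) - R(f_{\mathcal{F}})}_{\text{imperfect data}}
+ \underbrace{R(\tilde{f}_{n}) - R(f_{n})}_{\text{imperfect computation}}
$$

The identity holds because the intermediate terms cancel. A richer model class shrinks the first term, more data shrinks the second, and more compute (or a more efficient optimizer) shrinks the third.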

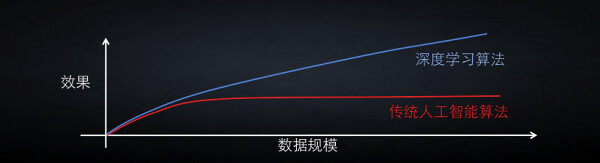

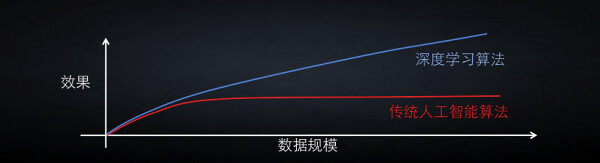

So we can see that, first, we need a sufficiently complex model to reduce the model bias, and second, we need large, unbiased data to make up for the limits of the data. That leads to our conclusion: compared with traditional artificial intelligence, deep learning keeps absorbing the dividends of data growth as the data grows. A traditional model may not be complex enough. A linear model, for example, still has a large bias however large the data becomes. Or the model may be very good but computationally intractable. For example, if a very good model has a computational complexity between quadratic and cubic in n, then going from a set of 10,000 samples to a set of 1 million samples requires at least 10,000 times the computing resources. In the age of big data, such a model can only go so far.
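The scaling figure in this paragraph can be checked with a few lines of arithmetic. This is a minimal sketch; the function name `compute_growth` is made up here, and it assumes cost grows exactly as n raised to the stated exponent, ignoring constants.

```python
def compute_growth(n_small: int, n_large: int, exponent: int) -> int:
    """Factor by which work grows for a cost proportional to n**exponent."""
    ratio = n_large // n_small  # 1,000,000 / 10,000 = 100 times more samples
    return ratio ** exponent


quadratic = compute_growth(10_000, 1_000_000, 2)
cubic = compute_growth(10_000, 1_000_000, 3)
print(quadratic, cubic)
```

With 100 times more samples, a quadratic-cost method needs 10,000 times the computation, which is the "at least" figure in the text, and a cubic-cost method needs 1,000,000 times.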

Deep learning is a flexible and rich language system. Within this framework, different problems are handled with different structures. Baidu is now well out in front. In terms of scale, Baidu may have been the first company in the world to deploy GPU servers at large scale: in 2012, our purchase was the largest in the world.

Today we have five or six thousand PC servers and more than 2,000 GPU servers for this large-scale deep learning training, so we are now able to build the world's largest artificial neural networks, with parameters on the scale of hundreds of billions. This is a great help to many algorithms in areas such as speech recognition, image recognition, natural language, advertising, and user modeling.

Today we are no longer at the research stage. Baidu's businesses, such as search, advertising, images, and voice, have all improved greatly because of deep learning. Its role across the business is to make things more intelligent. In a nutshell, our business model is to connect people with information and people with services: how do we understand a person's intention from a keyword, a photo, or a spoken sentence, how do we match the user's needs, and how do we push the right information and services?

Examples of deep learning applications

Phoenix Nest is an example of deep learning being turned into revenue. Over the past two years it has brought enormous improvements in click-through rate and search satisfaction.

Take another example: how do we improve search relevance? The rough idea is this. In the past, assessing relevance meant extracting many hand-built features. Today we match the user's query against a result and obtain a score, and the neural network compares scores. During training, we take the preferences that users have shown and require the scores of the preferred and non-preferred results to differ by a large enough margin. I trained this system on more than 100 billion samples, and over the past two years it has produced a huge improvement in relevance.
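The training idea described here, pushing the score of the preferred result above the other by a large enough gap, resembles a pairwise margin (hinge) ranking loss. The sketch below is a generic illustration of that loss, not Baidu's actual system: the dot-product `score`, the vectors, and the margin value are all assumptions.

```python
def score(query_vec, doc_vec):
    # Stand-in for the neural network: a plain dot product between
    # a query representation and a result representation.
    return sum(q * d for q, d in zip(query_vec, doc_vec))


def pairwise_hinge_loss(query_vec, preferred_doc, other_doc, margin=1.0):
    # Zero loss once the preferred result outscores the other by at
    # least `margin`; otherwise the loss grows with the shortfall.
    gap = score(query_vec, preferred_doc) - score(query_vec, other_doc)
    return max(0.0, margin - gap)


query = [1.0, 0.0]
clicked = [2.0, 0.5]   # result the user preferred (hypothetical)
skipped = [0.5, 1.0]   # result the user passed over (hypothetical)

loss_ok = pairwise_hinge_loss(query, clicked, skipped)   # gap 1.5, margin met
loss_bad = pairwise_hinge_loss(query, skipped, clicked)  # gap -1.5, penalized
print(loss_ok, loss_bad)
```

In a real system the scoring function would be a trained network and the loss would be minimized by gradient descent over very many such preference pairs.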

Much of this relevance gain comes from semantic understanding, especially for long-tail queries that may be issued fewer than 10 times a day. These are the real test of a search engine, because on very high-frequency queries every search engine performs much the same. Here is an example: a query about the front license plate of a Maserati. Our old system relied largely on keyword matching and did not answer the question. Our competitor's (Google's) results matched the keyword "front" but showed no further semantic understanding. After we ran our model, you can see that although the query was phrased as "front" and "where to put the plate", the system found "how to fit a front licence plate". It is not matching keywords but matching meaning, and that change comes from deep learning.

Speech recognition is another example. Baidu's speech recognition went into practical use in 2012, and deep learning turned what used to be highbrow technology into something you can do with big data. In the past, speech recognition started from acoustic features such as frequency, extracted phonemes from them, and then worked layer by layer from the bottom up. Today we do not begin by caring what each piece is, only what the whole utterance reads as. Deep learning turns as many of the intermediate steps as possible into trained steps, without much manual intervention, so that the model benefits from training on large amounts of data. On benchmark tests we get very good results.

Another example is recognizing handwritten phone numbers on waybills (AWB). We started with detection, then segmentation, then recognition of each digit after it was cut out. But in an example like this one you find that the digits cannot be separated, so you treat the whole thing as a single decoding problem. This shows again that deep learning is not a black box but a very rich language: we need to understand the problem well enough to develop the most suitable model.

I have mentioned image recognition. Combined with character recognition, speech recognition, and machine translation, it lets Baidu build products for cases such as a Chinese visitor on the streets of New York asking, "Where is there a Sichuan restaurant?" You speak in Chinese, the speech is recognized, and it is translated into English. If we keep optimizing, this can become a reality within a few years, so children can spend more time playing and less time learning boring English (laughter). Photos work too, of course. A good friend of mine, a professor at NYU Shanghai, uses this product to order from menus, so he no longer has to worry about whether a dish is chicken legs or something he is afraid to eat.

Let us look at another example of image recognition. In 2013, a widely used mobile application was Baidu's Magic Map: an ordinary person takes a photo, and the system tells you which celebrity you most resemble. The product ranked first in the overall iOS chart for three consecutive weeks, and at its peak 9 million people a day uploaded photos, so we suddenly collected a lot of faces (laughter). So far this is the record for a Baidu mobile product.

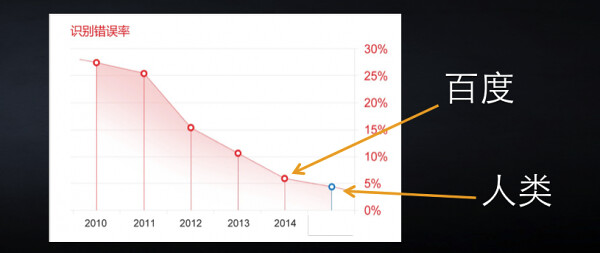

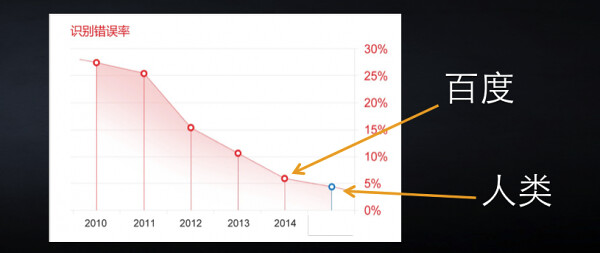

Many high-tech companies take part in the LFW competition, an evaluation of face recognition. What is it about? Given some photos, the system has to determine which belong to the same person and which to different people. Last year Facebook announced that it had achieved the best results. But companies keep competing with one another, and for now the best result is Baidu's, with an error rate of about 0.15%. How does that compare with humans? When people were asked to do the same assessment, their error rate was about 0.35%, which means machines may be better than people. Of course, this is just one specific task. Machines outperforming humans here, or reaching human level, does not mean artificial intelligence as a whole has done so.
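To make the evaluation concrete, here is a toy sketch of how a pair-verification error rate can be computed. It is not the benchmark's official protocol: the two-dimensional "embeddings", the cosine-similarity rule, and the 0.5 threshold are invented for illustration, whereas real systems compare learned face features.

```python
import math


def cosine(a, b):
    # Cosine similarity between two feature vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verification_error_rate(pairs, threshold=0.5):
    # Each pair is (features_a, features_b, same_person_label).
    # Predict "same person" when similarity reaches the threshold.
    errors = 0
    for feat_a, feat_b, same_person in pairs:
        predicted_same = cosine(feat_a, feat_b) >= threshold
        if predicted_same != same_person:
            errors += 1
    return errors / len(pairs)


pairs = [
    ([1.0, 0.0], [0.9, 0.1], True),   # very similar, labeled same
    ([1.0, 0.0], [0.0, 1.0], False),  # orthogonal, labeled different
    ([1.0, 0.0], [0.8, 0.6], False),  # similar but labeled different: an error
    ([0.0, 1.0], [0.1, 0.9], True),   # very similar, labeled same
]
rate = verification_error_rate(pairs)
print(rate)
```

An error rate such as the 0.15% quoted above would correspond to roughly 15 wrong decisions per 10,000 pairs.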

There is also the very interesting ImageNet, a competition in image recognition and classification. People from other backgrounds may not have come across it, but within the industry we can hear the rumble of thunder: thanks to big data and deep learning, progress is very fast. ImageNet is about classifying pictures, and over the past five years the error rate has kept falling. In the first competition, in 2010, my team took first place. The results have changed every year since, and last year's best result came from my colleagues at Baidu. Stanford also ran an assessment to see how humans would score on the same test.

On ImageNet, Microsoft announced a result in January this year that surpassed Baidu's. Some time ago colleagues at Google reported a result better than Microsoft's, and last week my colleagues told me that our results had passed Google's. It is all very interesting. Looking back years from now, we will see that researchers at our different companies were like classmates competing in the same class and sharing experience, and that this really did push the industry forward.

Image understanding is always coupled with human knowledge and language, so how do we make this even more interesting? We have been working on "describing pictures in words", that is, generating a sentence that describes an image. For this picture, the description is "a living room with a white sofa and a blue carpet, with afternoon sunshine coming into the room." That description was produced by a machine. Baidu researchers published this work last year, the earliest paper of its kind in the world. Google later published similar papers that cited our results.

We are also generating descriptions in Chinese, such as this one: "a double-decker bus on the street." What can we do with this? If we look for similar pictures using image recognition alone, the results may simply be (streets), because the main content of the image is buildings and streets. But if we describe the scene in natural language, the images we retrieve are different. This is how Baidu uses natural language to strengthen image understanding, and the model behind it again supports the point I made earlier: deep learning provides a language system, and for a specific problem you must be able to build a model that captures it. In this example a deep convolutional neural network sits at the bottom and produces a representation, and on top of it a multilayer neural network model generates language. The resulting sentence both fits the semantics of our language and reflects the content of the image.

We can go further and do something more human-like. With an image like this one, for example, we can teach children. The machine may ask the child about the image: what is he standing on? "He" refers to the man, not the woman next to him, who would be "she". We hope the machine can also answer that question itself from the pixels of the image: he is standing on a surfboard. The machine may then go on to ask: is he wearing a coat? And it can answer: no. All of this is generated automatically from the picture by a deep neural network. It goes beyond the image recognition of the past: "what is in the picture" becomes "what does it mean" and "what relationships does it contain".

Thoughts on the future: artificial intelligence, robots, and autonomous driving

Let us return to the present and future of artificial intelligence. Internet services in the past also had these aspects: perception, understanding, and decision-making. Perception meant acquiring data, understanding meant large-scale computation such as processing and indexing, and decision-making meant choosing which results and services to show. All of this happened online. Today the mobile Internet is closer to people and tied to the situation you are in. In terms of perception, we want to obtain information from people and from the physical world, and this offline information may matter more than information from the online world. In terms of services, what used to be delivered online will extend offline, into traditional industries. Smart hardware, autonomous driving, and robots will play a more important role in the future.

Here are some of our many experiments with smart hardware (showing an autonomous driving video). Future cars will probably be armed to the teeth with all kinds of sensors. Why do we want to work on autonomous driving in China? It is a purely technical question of conditions. I sometimes joke with my colleagues that if our autonomous driving works in China, the technology will work anywhere in the world, whereas Google's technology from the United States may not: it could not deal with Chinese-style road crossing, and it could not handle manhole covers that have been pried off (laughter). Artificial intelligence is built on data, and without that data you simply cannot do it. Technology developed in this environment is bound to be universal.

This is real-time road scene understanding based on images and built on deep learning: our route, traffic signs, vehicles on the road, and lane markings must all be identified in real time. How well can we do today? On one benchmark in this area, first and second place both belong to Baidu, with results much better than third place. Here we also had people without special training identify the same (traffic) data, and the machine did better than the people. In this example a person and a traffic sign cover the back of a car, revealing only a fraction of it. People cannot sense that the vehicle is there, but the machine can identify it. So driving based on sensors can be safer. Elon Musk said a while ago that in the future it may be illegal for humans to drive, and this may become a reality.

This is lane understanding. Why do we work on positioning? We have a practical goal: an augmented reality navigation system based on the real-time scene. Anyone who has driven in Beijing knows how many main roads and side roads there are. The Xizhimen overpass, for example, basically looks like the China Unicom logo. An augmented reality navigation system based on the real-time scene is better than voice navigation or navigation on a two-dimensional map. We think autonomous driving should be a gradual process, from assisted driving to active safety, then to automated driving under restricted conditions, then to highly automated driving, with business opportunities at every step. What we want to build is not a system that replaces people, but an integrated system of people and vehicles. The relationship between person and car is like that between rider and horse: the horse acts on its own, but it can be controlled.

Finally, to summarize: we believe that within 5 to 10 years, three things will become inevitable. First, all devices will have smart sensors. Second, all devices will have a brain in the cloud. Third, all devices will change from single-function devices into nodes for connection and services. The cell phone has already gone through this process: in the past a phone was just a phone, while on today's phone you make only a few calls a day. From this perspective, everything ends up becoming a kind of robot system that perceives, understands, and decides.

We technical people often feel that making a machine very powerful is a very cool thing, but in itself it has no great value. Take Deep Blue: it could outperform people in one setting, but it had little further influence on the world. Search engines like Google and Baidu shorten the distance between people and information. They generate great social value, and that is why they can achieve great commercial value. What makes this technology great is not that it makes machines greater, but that it lets every ordinary person become more creative and greater.

Everyone is familiar with this saying, which I have changed a little: the world is ours, and it is also the robots', but ultimately it belongs to the people who control the robots. Thank you very much!
